The SparkDev AI Team.

Author: Adrian Perez

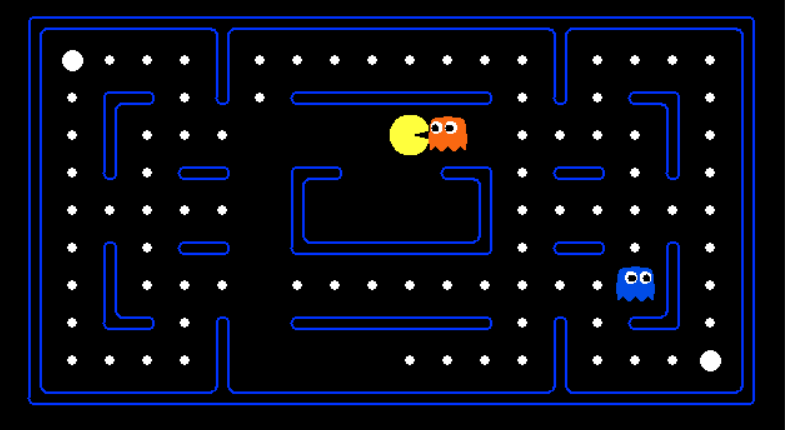

Teaching Pac-Man the Value of Life

We had recently begun the training phase of our Pac-Man reinforcement learning project. In case you guys aren't in the loop: reinforcement learning is the method of letting an agent (in this case, Pac-Man) run wild, then rewarding (or punishing) it based on the actions it took during the attempt. Here is a quick rundown of our plan:

1. Reward Pac-Man for eating pellets.
2. Reward Pac-Man for eating ghosts.
3. Punish Pac-Man for getting captured by a ghost.
4. Punish Pac-Man for living too long (after all, we want him to complete levels quickly).

The plan was to tweak the reward and punishment values until we got the desired results. When we ran our first test, though, we got some unexpected results... Pac-Man was suicidal. Because our rewards didn't properly convey the behaviors we wanted Pac-Man to learn, we ended up watching a strange interaction: Pac-Man would sit in place, and at the first sight of a ghost he would head straight for it, trying to end his life as soon as possible. Since this also confused the ghost AI, the whole thing looked like something out of a Benny Hill skit, with Pac-Man chasing the ghost across the map while the ghost had no idea how to handle the situation. We clearly have a ways to go, but that first interaction was a fascinating moment, and it defied all our expectations for how our first training session would go.
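To make the four-part reward plan a bit more concrete, here is a minimal sketch of how such a reward scheme might be written down. All of the numbers and names (`RewardConfig`, `step_reward`, `episode_return`) are hypothetical and chosen only for illustration; they are not the values or code we actually used. The usage at the bottom shows one way reward values can fail to say what you mean: with these made-up numbers, an episode where Pac-Man rushes a ghost and dies quickly earns a higher total reward than one where he survives a long time but eats very little.

```python
from dataclasses import dataclass


# Hypothetical reward values for illustration only, not the team's real settings.
@dataclass
class RewardConfig:
    pellet: float = 10.0   # 1. reward for each pellet eaten
    ghost: float = 50.0    # 2. reward for each ghost eaten
    death: float = -100.0  # 3. penalty for being captured by a ghost
    step: float = -1.0     # 4. penalty charged every time step, to discourage living too long


def step_reward(cfg: RewardConfig, pellets_eaten: int, ghosts_eaten: int, captured: bool) -> float:
    """Reward for a single time step of an episode."""
    reward = cfg.step
    reward += cfg.pellet * pellets_eaten
    reward += cfg.ghost * ghosts_eaten
    if captured:
        reward += cfg.death
    return reward


def episode_return(cfg: RewardConfig, steps: int, pellets: int, ghosts: int, dies: bool) -> float:
    """Undiscounted total reward for an episode, given its summary totals.

    This equals summing step_reward over every step of the episode.
    """
    total = cfg.step * steps + cfg.pellet * pellets + cfg.ghost * ghosts
    if dies:
        total += cfg.death
    return total


if __name__ == "__main__":
    cfg = RewardConfig()
    # Rush the first ghost and get caught after 20 steps, eating nothing.
    rush_ghost = episode_return(cfg, steps=20, pellets=0, ghosts=0, dies=True)
    # Wander for 500 steps, eat 5 pellets, then get caught anyway.
    play_on = episode_return(cfg, steps=500, pellets=5, ghosts=0, dies=True)
    print(rush_ghost, play_on)  # -120.0 -550.0
```

Under these illustrative values the per-step penalty piles up faster than the pellet rewards, so ending the episode early is the "better" strategy as far as the numbers are concerned, which is the kind of mismatch that tuning the reward values is meant to catch.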